This post is part of the SATF work.

The picture would not be complete without describing the problems with simulation auto acceptance tests that surfaced during development of the mobile robot control system. The first and most serious problem is that Microsoft has abandoned MRDS support. Since this leaves no hope that any of the graver MRDS flaws will ever be fixed, I could wrap up the section right here. But I should at least name these flaws, and, to look impartial, balance them with some homegrown ones.

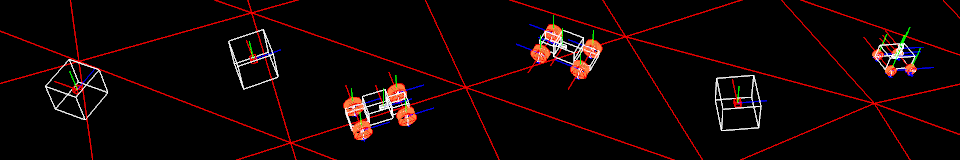

The worst flaw is the unstable simulator. The stress of thousands of entities being added and deleted during the probabilistic testing procedure is too much for it: in some physics environments, the simulator occasionally fails with an AccessViolationException. Even this result I owe to Gershon Parent, who posted a workaround for a similar blocking simulator instability issue on the MRDS forum while I was developing the test runner. And even when the simulator does not fail, I doubt its general adequacy, because in at least one case it proved utterly inadequate, and in another, overly sensitive.

Another significant issue, strictly speaking a CCR rather than an MRDS one, is the unwieldy state machine construction based on the `yield` operator and iterators, which serves as the primary asynchrony implementation pattern. At the time, it offered unique capabilities for nonblocking concurrency, at the expense of depriving methods of meaningful return values. This severe limitation could be justified then, but not now: Microsoft has since extended the concurrency capabilities of the .NET Framework with the async/await keywords and the Task Parallel Library (TPL), which provide the same facilities transparently to the developer.
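The contrast can be illustrated with a Python analogue, since Python has both generators and async/await. This is a sketch, not CCR code. `Port`, `run_all` and the sensor handlers are hypothetical stand-ins. The result port imitates the convention that C# iterators force on CCR handlers: an iterator method cannot `return` a value. Python generators actually can return values, so the restriction here is a deliberate imitation.

```python
import asyncio
from collections import deque


class Port:
    """Hypothetical stand-in for a CCR port: a plain message queue."""

    def __init__(self):
        self.messages = deque()

    def post(self, message):
        self.messages.append(message)


def read_sensor_iter(raw, result_port):
    """Iterator-style handler. Each yield hands control back to the scheduler,
    imitating a nonblocking wait (the yielded value is ignored here). Imitating
    C# iterators, the result is posted to a port instead of being returned."""
    yield "waiting for sensor"
    filtered = raw * 2
    yield "waiting for filter"
    result_port.post(filtered)


def run_all(*tasks):
    """Round-robin scheduler that advances each iterator one step at a time."""
    queue = deque(tasks)
    while queue:
        task = queue.popleft()
        try:
            next(task)
        except StopIteration:
            continue  # handler finished; drop it
        queue.append(task)


async def read_sensor_async(raw):
    """async/await version: the same flow reads sequentially and returns its result."""
    await asyncio.sleep(0)  # nonblocking wait
    filtered = raw * 2
    await asyncio.sleep(0)
    return filtered


async def read_both_async():
    # gather runs both coroutines concurrently and returns results in argument order
    return await asyncio.gather(read_sensor_async(1.5), read_sensor_async(2.0))


if __name__ == "__main__":
    port = Port()
    run_all(read_sensor_iter(1.5, port), read_sensor_iter(2.0, port))
    print(list(port.messages))             # [3.0, 4.0]
    print(asyncio.run(read_both_async()))  # [3.0, 4.0]
```

In the iterator version, the caller has to know which port to read the result from. In the async version, the result is simply the value of the awaited expression. This is the transparency that async/await brings.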

Yet another problem is the almost prohibitively steep MRDS learning curve: you can hardly get by without reading at least half of a book (namely, Kyle Johns’s “Professional Microsoft Robotics Developer Studio”). That is too high a bar for MRDS to become mainstream. The Visual Programming Language, part of MRDS, was evidently an attempt to alleviate the problem, and apparently an unsuccessful one.

As for the problems inherent to simulation acceptance tests as such, long test suite execution time tops the list. Running a conventional automated acceptance test suite usually takes a lot of time, orders of magnitude more than running a unit test suite, and acceptance tests for robotics are no exception. Lengthy resets of the physics and software environments, plus the inherently long-running tests themselves, multiplied by hundreds of probabilistic runs, leave no chance for a “continuous” style of test suite execution, which implies rerunning the entire suite on each change. Measures such as distinguishing between static and dynamic objects (mentioned in the “Test flow” section) or accepting results early (mentioned in the “Test runner implementation” section) can cut the execution time several times over, but cannot change its order of growth.

One rough-and-ready but practical way of handling nondeterministic tests is the “fast check” execution mode of the test runner, which reruns probabilistic tests only until their first success. It assumes that previously successful tests keep their success rate and thus take no more than a couple of tries. It also assumes that the failing tests, which are outnumbered, fail entirely rather than just more often, which also does not take long to discover. Hence, full-blown probabilistic test suite execution is relegated to nightly builds, while faster-paced activities can be handled by focused test execution and the fast check mode.
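Below is a minimal sketch of the two execution modes. It assumes that each probabilistic test can be represented as a function that performs one randomized run and reports pass or fail. The names `fast_check`, `full_run`, `max_tries`, `runs` and `required_rate` are hypothetical and are not the SATF test runner's API. The `max_tries` cap is also an assumption: without it, a test that fails entirely would never stop.

```python
import random
from typing import Callable, Optional, Tuple

# Hypothetical test signature: one randomized run, returns True on pass.
ProbabilisticTest = Callable[[random.Random], bool]


def fast_check(test: ProbabilisticTest, max_tries: int = 3,
               rng: Optional[random.Random] = None) -> Tuple[bool, int]:
    """Rerun the test only until its first success.

    Returns (passed, runs_used). The max_tries cap (an assumption) keeps a
    consistently failing test from running forever."""
    rng = rng if rng is not None else random.Random()
    for attempt in range(1, max_tries + 1):
        if test(rng):
            return True, attempt
    return False, max_tries


def full_run(test: ProbabilisticTest, runs: int = 100, required_rate: float = 0.9,
             rng: Optional[random.Random] = None) -> Tuple[bool, float]:
    """Nightly-build style: run the test a fixed number of times and compare
    the observed success rate with the required one."""
    rng = rng if rng is not None else random.Random()
    successes = sum(test(rng) for _ in range(runs))
    rate = successes / runs
    return rate >= required_rate, rate


if __name__ == "__main__":
    rng = random.Random(42)
    # A hypothetical flaky test that passes in about 80% of runs.
    flaky = lambda r: r.random() < 0.8
    print(fast_check(flaky, rng=rng))
    print(full_run(flaky, runs=200, required_rate=0.75, rng=rng))
```

A test that still passes most of the time usually costs `fast_check` one or two runs. `full_run` always costs `runs` executions per test, and that cost is what pushes it into nightly builds.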

Another issue concerns the initial construction of a test's physics environment. The simulation engine GUI ostensibly provides an intuitive drag-and-drop tool for building environments. However, it proved unreliable, so all environments had to be constructed in code. Thus every test has, along with its XML environment description, accompanying construction code. But the testing framework provides no support for managing such code. The result was an ad hoc builder service containing a commented-out method for initializing each test's environment, which is inconvenient and underdeveloped. By contrast, creating service manifests went without a hitch thanks to the DSS Manifest Editor tool, which offers an effortless, user-friendly way to compose services.

Although the last version of MRDS was not its first, it was still immature. This applies especially to the simulation engine, which is responsible for the graver problems of the simulation auto acceptance tests. The essential problem of the tests themselves was the very long test suite execution time. The other problems could be either fixed or alleviated by developing extra tooling and infrastructure.
