This post is part of the SATF work.

To me, burdened as I am with agile bigotry, both problems stated in the “Problem” section (continuous change control and the laborious testing procedure) seemed familiar. No wonder: similar questions were posed and solved in the eXtreme Programming (XP) software development methodology through extensive test automation.

XP relies on automated testing at every level of the process. The main bulk of development is driven by automated unit tests, which provide the freedom for constant refactoring and cover continuous changes at the component level. Integration tests take care of more complex functionality spanning multiple components. In conventional software development the technological gap between unit and integration tests is negligible, which is why the two are usually distinguished only from a theoretical perspective. Verification of the highest-level functionality (usually in the form of use cases) is handled by automated acceptance tests. They also contribute in other, no less important, ways: they improve communication with the customer, allow requirements to be stated precisely, and serve as executable documentation. Automated acceptance testing requires complex infrastructure support. Besides a customer-level language and tools for writing the tests (such as RSpec and Cucumber), there must be tools for automatically manipulating the environment and the system and for assessing the test results.

In general, automated testing takes over laborious and repetitive tasks from developers and testers. It thus backs up the former in continuously improving the design (every change is guaranteed either not to break previously implemented functionality or to fail some automated test and expose the problem) and frees the latter for genuinely valuable testing activities (time-consuming routine, such as environment configuration and test scenario execution, is automated).

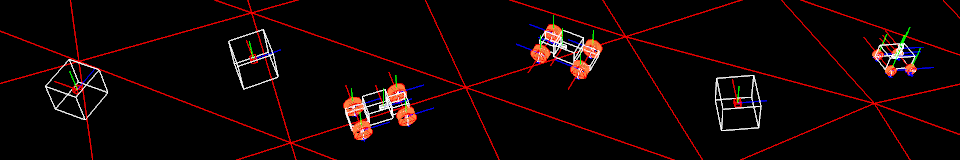

Although automated unit tests can and should be used in software development of any kind, they cannot help with the problems stated earlier, because those problems reside at the higher layers. Integration tests, let alone acceptance tests, are extremely labour-intensive in robotics and literally cry out for automation, which is the main goal of this work. The only conceivable approach is to run the tests inside a simulator, where the state of the robot and of the environment objects can be manipulated and queried programmatically, and where the tests can be rerun at no cost. In general, every such simulation auto test should conform to the following template:

- initialization of the physics environment – populating the simulated world with test fixture objects in a predefined state

- initialization of the software environment – launching and configuring all the software needed for the program under test

- execution of a scenario – issuing a sequence of commands to the involved physical and software entities

- assessment of the result – checking whether the resulting state of the world and the software satisfies a test criterion after a specified period of time
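To make the four-step template concrete, here is a minimal sketch of one simulation auto test. It is written in Python purely for illustration; it does not use the MRDS API, and every class and name in it (`Body`, `ToySimulator`, `DriveController`, `test_robot_stops_in_front_of_box`) is hypothetical. The "simulator" only integrates constant velocities along one axis, which is just enough to show each step of the template.

```python
from dataclasses import dataclass, field


@dataclass
class Body:
    """Hypothetical simulated object: a name, a 1-D position and a velocity."""
    name: str
    x: float
    vx: float = 0.0


@dataclass
class ToySimulator:
    """Hypothetical stand-in for a physics simulator (NOT the MRDS API).

    It only integrates constant velocities along one axis. What matters is
    that its state can be set and queried programmatically and that a run
    can be repeated at no cost.
    """
    bodies: dict = field(default_factory=dict)
    time: float = 0.0

    def add(self, body):
        self.bodies[body.name] = body

    def step(self, dt):
        # Advance every body by one time step and advance simulated time.
        for body in self.bodies.values():
            body.x += body.vx * dt
        self.time += dt


class DriveController:
    """Hypothetical program under test: drives a robot towards a target."""

    def __init__(self, sim, robot_name, speed):
        self.sim = sim
        self.robot = robot_name
        self.speed = speed
        self.target = None

    def go_to(self, target_x):
        self.target = target_x

    def update(self):
        # Bang-bang control: full speed towards the target, stop when close.
        robot = self.sim.bodies[self.robot]
        if self.target is None or abs(self.target - robot.x) < 0.05:
            robot.vx = 0.0
        else:
            robot.vx = self.speed if self.target > robot.x else -self.speed


def test_robot_stops_in_front_of_box(dt=0.01, timeout=10.0):
    # 1. Physics environment: fixture objects in a predefined state.
    sim = ToySimulator()
    sim.add(Body("robot", x=0.0))
    sim.add(Body("box", x=3.0))

    # 2. Software environment: launch and configure the program under test.
    controller = DriveController(sim, "robot", speed=1.0)

    # 3. Scenario: issue a command, then let simulated time run until timeout.
    controller.go_to(sim.bodies["box"].x - 0.5)  # stop 0.5 units short of the box
    while sim.time < timeout:
        controller.update()
        sim.step(dt)

    # 4. Assessment: check the final world state against the test criterion.
    robot = sim.bodies["robot"]
    assert abs(robot.x - 2.5) < 0.1, f"robot ended at x={robot.x:.2f}"
    assert robot.vx == 0.0, "robot is still moving"
```

A test runner such as pytest would pick up the `test_` function as is. Because the whole world is rebuilt in step 1, each run starts from the same state, which is what makes rerunning such tests free; calling the function with a too-short `timeout` (say `1.0`) makes the assessment fail, just as a real regression would.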

A suite of such tests covering the body of system functionality will bring about the following:

- labour automation. The extremely laborious task of reproducing robotics test cases goes to the machine.

- better test coverage. Testers, relieved by automation, can produce more and better test cases.

- response to continuous change. Developers, backed up by an extensive automated top-down test suite, can change the software freely.

- system contract. Customers and users (given some additional tooling), treating the high-level test suite as a kind of executable documentation, can state requirements precisely and verify the software’s correctness on demand.

Admittedly, all of the above effects of test automation sound general enough to hold for any software field. But because testing in robotics is so unwieldy, the effect is more profound there. Interestingly, unlike in conventional software, it seems more convenient (at least in MRDS, which I will discuss later) to place integration tests closer, in terms of technology, to acceptance tests than to unit tests. So from now on, the phrase “simulation auto acceptance testing” will mean automated simulation tests capable of taking over the responsibilities of both integration and acceptance testing. And it is these tests that will be kindly requested to handle the stated problems.
