This post is part of the SATF work.

The valuable artefact of automated acceptance testing is a test suite. This section reviews the test suite of the system that was developed in parallel with the simulation acceptance testing framework and served as its main testbed. It also shows some curious tests from a side project, with an entertaining cartoon as a digestif.

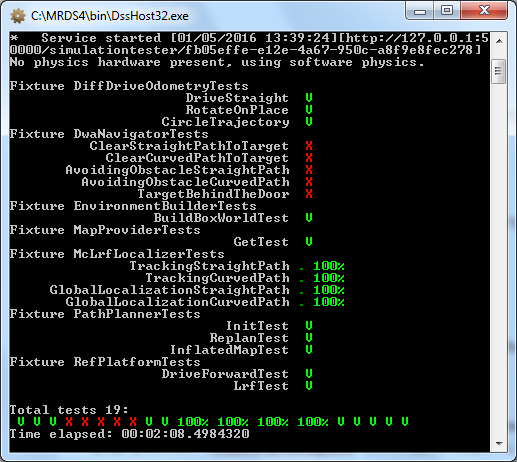

There are currently 19 tests in total in the differential drive mobile robot control system project. Four of them (for the Monte Carlo localization service) are probabilistic and are discussed in greater detail in the “Probabilistic testing” and “Test example” sections. Videos of some tests can be found in the Gallery. The test suite covers:

- the differential drive odometry service, checking correct odometry calculation for different trajectories;

- the dynamic window approach navigation service, checking navigation toward a goal for various environment configurations;

- the builder service, checking physics environment construction for a given map;

- the map provider service, checking that it provides a fixed environment map;

- the Monte Carlo localization service, checking robot localization for different modes and different trajectories;

- the path planner service, checking planning of a path;

- the differential drive platform entity, checking the drive and sensor components of the simulated robot.

The tests for the builder, map provider and path planner do not depend on interactions with the environment; they are essentially integration tests and add relatively little value. The other four fixtures, which test software interacting with actuators and sensors, are the truly valuable ones. Running all the tests takes approximately 35 minutes in the slowest testing mode, with physics and graphics simulation on, and only 2 minutes in the fast check mode.

Differential drive tests full check execution log

Differential drive tests fast check execution log

The failing DWA Navigator tests illustrate the problem mentioned in the “Time handling” section. During the development of the tests and code, physics simulation with improved quality was used, not because of stricter simulation requirements but as a means of slowing the whole process down to simplify debugging. This gave the algorithm more time for its processing, whereas the time available in the faster testing mode turned out to be insufficient.
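The effect can be illustrated with a small back-of-the-envelope model. This is only a sketch under stated assumptions, not the framework's actual time handling: the mode slowdown factors, the computation time and the control period below are all hypothetical numbers chosen for illustration. The point is that a fixed amount of real computation time corresponds to more simulated time when the simulation runs faster.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mode:
    """A testing mode, described by how slowly simulated time advances."""
    name: str
    # Hypothetical: real (wall-clock) seconds needed to simulate one second.
    wall_seconds_per_sim_second: float


def sim_latency(compute_wall_seconds: float, mode: Mode) -> float:
    """Simulated time that passes while the algorithm computes one command."""
    return compute_wall_seconds / mode.wall_seconds_per_sim_second


def meets_deadline(compute_wall_seconds: float, mode: Mode,
                   control_period_sim_seconds: float) -> bool:
    """True if the command is ready before the next control period starts."""
    return sim_latency(compute_wall_seconds, mode) <= control_period_sim_seconds


# All numbers below are illustrative assumptions, not measurements.
FULL_CHECK = Mode("full check", wall_seconds_per_sim_second=2.0)
FAST_CHECK = Mode("fast check", wall_seconds_per_sim_second=0.1)
COMPUTE_WALL_SECONDS = 0.05        # real time the navigator needs per command
CONTROL_PERIOD_SIM_SECONDS = 0.1   # simulated time between commands

if __name__ == "__main__":
    for mode in (FULL_CHECK, FAST_CHECK):
        ok = meets_deadline(COMPUTE_WALL_SECONDS, mode, CONTROL_PERIOD_SIM_SECONDS)
        print(f"{mode.name}: command ready in time = {ok}")
```

With these assumed numbers the same algorithm keeps up in the slow mode and falls behind in the fast one, which is the kind of mismatch the failing tests expose.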

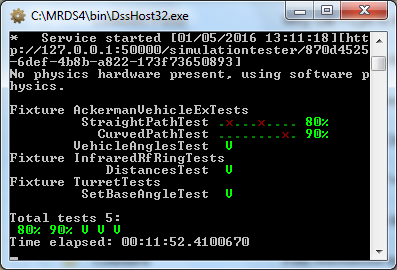

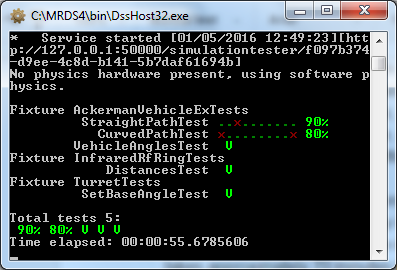

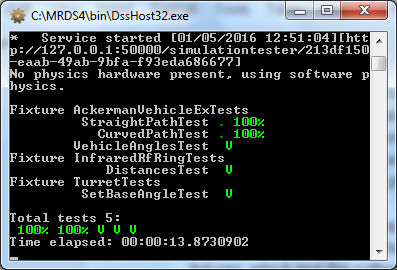

There are five more tests in the Ackerman vehicle side project; two of them were also discussed in the “Probabilistic testing” section. They cover:

- the Ackerman 4×4 vehicle model, checking its passive stability on cross-country terrain;

- the infrared rangefinder ring model, checking its distance measurements for different environment configurations;

- the turret model, checking its actuation.

The Ackerman vehicle model, which consists of several physics primitives with nontrivially suspended joints, does require physics simulation with improved quality. That is why these 5 tests take 12 minutes in the slowest mode. Surprisingly, this time drops to 1 minute when graphics simulation is turned off. The fast check mode takes only 15 seconds.
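To put the run times of both projects side by side, the ratios can be worked out directly from the approximate durations quoted in the text. The snippet below is just that arithmetic; the dictionary layout and the function name are illustrative choices.

```python
from datetime import timedelta

# Approximate durations as quoted in the text.
RUNS = {
    "differential drive (19 tests)": {
        "full check": timedelta(minutes=35),
        "fast check": timedelta(minutes=2),
    },
    "Ackerman vehicle (5 tests)": {
        "full check": timedelta(minutes=12),
        "without rendering": timedelta(minutes=1),
        "fast check": timedelta(seconds=15),
    },
}


def speedups(modes):
    """Return how many times faster each mode is than the full check."""
    baseline = modes["full check"]
    # Dividing one timedelta by another yields a plain float ratio.
    return {name: baseline / duration
            for name, duration in modes.items()
            if name != "full check"}


if __name__ == "__main__":
    for project, modes in RUNS.items():
        for mode, factor in speedups(modes).items():
            print(f"{project}: {mode} is about {factor:.1f}x faster than the full check")
```

For the differential drive suite the fast check comes out about 17.5 times faster than the full check. For the Ackerman vehicle suite, turning rendering off alone gives a factor of 12, and the fast check a factor of 48.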

Ackerman vehicle tests full check execution log

Ackerman vehicle tests without rendering check execution log

Ackerman vehicle tests fast check execution log

Here is a cartoon demonstrating the latter two devices mounted on a vehicle, just for your entertainment and mine:

Camera turret and infrared rangefinder ring mounted on manually controlled Ackerman vehicle

The 19 tests of the first project can be considered a self-sufficient set. They cover the happy paths of all the implemented services of the differential drive mobile robot control system. When they are green, I feel protected and free to proceed with new functionality.
