This post is part of the SATF work.

The good news is that all the words in the earlier SATF posts were not in vain. It turned out that simulation acceptance tests make at least some sense, if not money. This section presents the various, sometimes unexpected, aspects of that sense.

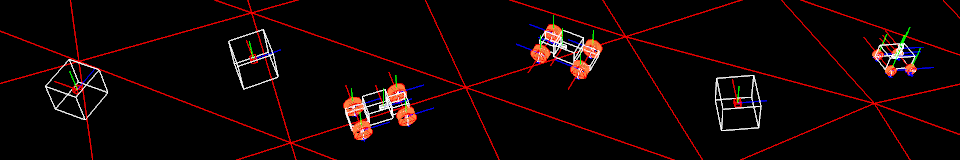

Undeniably, and surprisingly, the simulation acceptance tests had the most impact on the good old code-test-debug phase of software development. In a perfect world, the greater part of testing falls to the developer. Even with unit tests in place for every module, it took a lot of debugging and testing to integrate the functionality and make it work inside the simulator. So for me, a perfect-world developer, test automation (the ability to write a test once and then run it for free until the thing works) streamlined the procedure immensely. Test automation also made experimentation and investigation easier: because any environment and software setup could be re-instantiated effortlessly, this kind of technical drag disappeared from the process.

Although this time there were no behaviors to fuse, as described in the “Problem” section, the resilience and safety granted by automated simulation tests still found a way to prove themselves. Changes with obscure side effects in distant parts of the system were diligently watched, and the problems were exposed. The tests broke from time to time, not because they were brittle but because the changes introduced were breaking. One recurrent theme was the refactoring of the originally poorly modeled differential drive platform code, the code at the bottom of the whole system. Eventually it was virtually rewritten, but throughout its gradual evolution the odometry and localization tests kept breaking, flagging fresh breaking changes.

The organizing and guiding role of automated acceptance tests, or rather of the Behaviour Driven Development approach, also proved its worth. As promised, the tests structured the implemented functionality, provided immediate confirmation that supposedly correct functionality was actually operational, and tracked progress. Even on a one-person team, it was very reassuring to watch all the previously implemented services work. The necessity to state the test criteria explicitly and unambiguously made the capabilities of the implemented functionality very clear. For example: after the robot has moved along a straight trajectory for 10 s, the localization service can localize it to within a tolerance of 10 cm. What is more, the same necessity often forced the customer (me) to clarify for himself the requirements for the system.
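To make the idea of an explicit criterion concrete, here is a minimal sketch in Python rather than in the DSS/.NET code the project actually used. The names `Pose`, `localization_error` and `meets_localization_criterion` are hypothetical and only illustrate how the "within 10 cm" statement might be turned into a check; the real tests ran inside the simulator.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Planar robot position in metres (hypothetical type for illustration)."""
    x: float
    y: float


def localization_error(estimated: Pose, ground_truth: Pose) -> float:
    """Euclidean distance between the estimated and the true position."""
    return math.hypot(estimated.x - ground_truth.x,
                      estimated.y - ground_truth.y)


def meets_localization_criterion(estimated: Pose,
                                 ground_truth: Pose,
                                 tolerance_m: float = 0.10) -> bool:
    """True if the estimate lies within the stated tolerance (10 cm by default)."""
    return localization_error(estimated, ground_truth) <= tolerance_m
```

In an acceptance test, the ground truth would come from the simulator and the estimate from the localization service after the 10 s run; stating the tolerance as a named parameter keeps the requirement visible in the test itself.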

As for the approach to probabilistic testing, unfortunately it was not exploited extensively enough to declare it a success. But at least, in addition to the successful examples given in the “Probabilistic testing” section, it fixed the annoying case in which a single transient and perfectly acceptable test failure used to ruin the whole test suite run and demand manual intervention. Curiously, an unexpected synergy emerged between probabilistic testing and the code contracts technology, which was used extensively throughout the development. Code contracts make it possible to add runtime-checked pre- and postconditions to class methods. Thus hundreds of method calls with slightly different inputs, performed during probabilistic testing and augmented by automatically checked conditions, started to reveal subtle, extremely rare bugs, usually caused by concurrency or computational issues. Under normal conditions it would be hard even to notice them, much less trace them.
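The interplay between repeated randomized runs and runtime-checked conditions can be sketched roughly as follows. This is an illustration in Python, not the .NET Code Contracts API and not the test framework from these posts: the `contract` decorator, `normalize_angle`, `heading_trial` and `success_ratio` are all hypothetical names invented for the example.

```python
import math
import random
from functools import wraps


def contract(pre, post):
    """Wrap a function with runtime-checked pre- and postconditions."""
    def decorate(func):
        @wraps(func)
        def wrapper(*args):
            if not pre(*args):
                raise AssertionError(f"precondition violated: {args}")
            result = func(*args)
            if not post(result):
                raise AssertionError(f"postcondition violated: {args} -> {result}")
            return result
        return wrapper
    return decorate


@contract(pre=lambda angle: math.isfinite(angle),
          post=lambda result: -math.pi <= result <= math.pi)
def normalize_angle(angle):
    """Map any finite angle in radians to the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def heading_trial(rng):
    """One randomized trial: normalization must preserve the direction."""
    angle = rng.uniform(-100.0, 100.0)
    normalized = normalize_angle(angle)  # contracts are checked on every call
    assert math.isclose(math.cos(normalized), math.cos(angle), abs_tol=1e-9)
    assert math.isclose(math.sin(normalized), math.sin(angle), abs_tol=1e-9)


def success_ratio(trial, runs, seed=0):
    """Run `trial` with `runs` random inputs and return the fraction that pass."""
    rng = random.Random(seed)
    passed = 0
    for _ in range(runs):
        try:
            trial(rng)
            passed += 1
        except AssertionError:
            pass  # a failed check lowers the ratio instead of aborting the run
    return passed / runs
```

A suite built this way would compare `success_ratio(heading_trial, runs=300)` against a required threshold instead of failing on the first transient error, while every one of the hundreds of calls still exercises the pre- and postconditions.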

Debugging is very important. The ease of debugging simulation acceptance tests could determine the fate of the whole approach. Thanks to a specially developed MS Visual Studio add-in, it became no more trouble than debugging a conventional multithreaded application. The add-in makes it possible to start and debug any DSS manifest from within Visual Studio. Therefore, including the test runner manifest in a test project (like everything else, the test runner is a DSS service), combined with the Wip test fixture attribute parameter, provided a debugging experience comparable to the standard Visual Studio “Debug unit test” command. This is hard to overestimate.

All in all, the simulation acceptance tests turned out to be good. They dramatically streamlined the development process, provided a safety net for code evolution, and confirmed the benefits of behavior driven development in its guiding, organizing and requirements-developing roles. The approach to probabilistic testing was not exploited extensively enough to declare it a success, but enough to call it tentatively useful. Besides, the tests were easy to debug.
