This post is part of the SATF work.

Robotics, like other contemporary research fields, relies inherently on software. Although robotics software differs considerably from conventional software, it seems only reasonable for it to adopt and exploit the methods that have proved useful in traditional software development. This work presents one such attempt: the adaptation of automated acceptance testing to robotics software development.

Everything started from the need to develop software for a robot conceived as a robotic waiter. Its first layer of functionality comprised the various competencies of a mobile platform: obstacle avoidance, localization, path planning, etc. In some previous work, the mobile robot control system was behavior based, and newly developed behaviors often changed the behavior of the whole system in unexpected ways, while its correctness could be verified only by laborious manual execution of a test set. This twofold problem is described in the “Problem” section. The author’s agile software development background called for automated acceptance testing as a remedy. The proposed way of applying it to robotics (a general pattern of the simulation acceptance test) and the benefits it is expected to bring are described in the “Solution” section. Such robotics acceptance tests should take into account the nondeterministic aspects of robotics systems, because a large part of robotics has to deal with nondeterministic environments (which is essentially its main difference from conventional programs). The approach to testing such things is presented in the “Probabilistic testing” section; it boils down to rerunning a test multiple times in order to assess not just the result of a single run but the test’s success rate. The last section of the first part (poetically called “Contemplating”), “Test example”, gives a taste of the idea with one tangible example: testing a robot’s probabilistic localization service, with code and pictures.
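The rerun idea behind probabilistic testing can be sketched in a few lines. The framework itself is written in C# on top of MRDS; the Python below is only a language-neutral illustration, and every name in it (`success_rate`, `probabilistic_test`, `noisy_localization`, the Gaussian error model and the thresholds) is a hypothetical stand-in rather than part of the actual framework.

```python
import random
from typing import Callable

# One scenario run takes a random source and reports pass/fail.
Scenario = Callable[[random.Random], bool]


def success_rate(scenario: Scenario, runs: int, seed: int = 0) -> float:
    """Rerun the scenario `runs` times and return the fraction of passing runs."""
    rng = random.Random(seed)  # seeded only to keep this sketch reproducible
    passed = sum(1 for _ in range(runs) if scenario(rng))
    return passed / runs


def probabilistic_test(scenario: Scenario, runs: int,
                       required_rate: float, seed: int = 0) -> bool:
    """The test passes when the observed success rate reaches the threshold."""
    return success_rate(scenario, runs, seed) >= required_rate


def noisy_localization(rng: random.Random) -> bool:
    """Hypothetical single run: the pose estimate carries Gaussian error."""
    error_m = abs(rng.gauss(0.0, 0.1))  # assumed error model, in metres
    return error_m < 0.2                # single-run assertion: within 20 cm


if __name__ == "__main__":
    rate = success_rate(noisy_localization, runs=200)
    print(f"observed success rate: {rate:.2f}")
    print("test passed:", probabilistic_test(noisy_localization, 200, 0.8))
```

The point of the sketch is that the assertion moves from a single run to the aggregate: an individual run may fail without failing the test, as long as the success rate stays above the required level.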

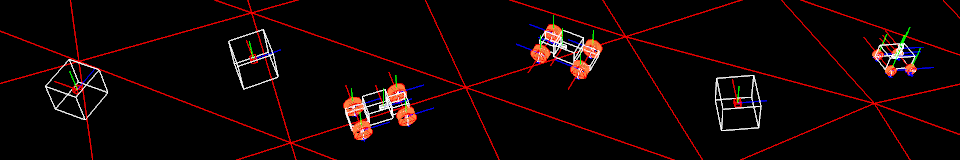

Part II, “Designing”, starts with a review of Microsoft Robotics Developer Studio in the “MRDS review” section. Indeed, all of the above can be performed only in a simulated environment, which MRDS helpfully provides along with a powerful infrastructure, a concurrency paradigm and the .NET environment. This wonderful potential comes with some obligations: the MRDS way of doing things heavily influences both the design and the implementation of the testing framework. The “Test flow” section explores the flow of a simulation acceptance test in the MRDS context. Test execution starts with populating the simulated physics environment and launching the software under test. Then the test scenario is executed, ending with assertions on the state of the environment and of the software. Incorporating the multiple-rerun strategy of the probabilistic testing approach introduces some extra quirks into the flow (such as the division of all participating entities into static and dynamic). From the static point of view, each test is described by three components: a description of the physics environment, a description of the software configuration, and the test scenario. The first two are declarative XML pieces; the last has to be written in C# code. The “Test structure” section works through this and states the few conventions involved, mostly concerning the constituents of the test scenario: setup, start, test, etc.
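A rough sketch of this flow follows, again in Python rather than the C# and XML the framework actually uses. It rests on assumptions that this overview does not confirm: that "static" entities are populated once per test while "dynamic" ones are restored before every rerun, and that the software under test is relaunched for each run. `Entity`, `SimulationTest` and `run_test` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Entity:
    name: str
    dynamic: bool  # assumption: dynamic entities are restored before each rerun


@dataclass
class SimulationTest:
    name: str
    entities: List[Entity]        # stands in for the physics environment description
    services: List[str]           # stands in for the software configuration
    scenario: Callable[[], bool]  # stands in for the coded test scenario


def run_test(test: SimulationTest, runs: int) -> Tuple[List[bool], List[str]]:
    """Execute the test `runs` times; return per-run results and a step log."""
    log: List[str] = []
    # Static part of the environment is populated once.
    for entity in test.entities:
        if not entity.dynamic:
            log.append(f"populate {entity.name}")
    results: List[bool] = []
    for _ in range(runs):
        # Dynamic entities go back to their initial state before every run.
        for entity in test.entities:
            if entity.dynamic:
                log.append(f"reset {entity.name}")
        # Launch the software under test, then run the scenario and its assertions.
        for service in test.services:
            log.append(f"launch {service}")
        results.append(test.scenario())
    return results, log


if __name__ == "__main__":
    demo = SimulationTest(
        name="reach_table",
        entities=[Entity("walls", dynamic=False), Entity("robot", dynamic=True)],
        services=["localization"],
        scenario=lambda: True,
    )
    results, log = run_test(demo, runs=3)
    print(results)
    print("\n".join(log))
```

Splitting entities this way keeps the expensive part of the environment setup out of the rerun loop, which matters once each test is executed many times.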

Part III, “Implementing”, deals with more down-to-earth issues, the first of which is test management in the “Tests organisation” section. Essentially, all the components of a single test are glued together by the test name: XML files must be named according to a convention, and scenarios in the code are marked with the corresponding attributes. A number of test scenarios are compiled into an assembly, which then goes to the test runner along with the accompanying XML files. On start, the test runner scans this assembly and collects all the tests inside. The next section, “Time handling”, is instructive and even fun in a sense: it shows how an ostensibly innocuous task, if not given due attention, can turn out to be excruciatingly complicated. The problem is to substitute real time, readily accessible to MRDS software, with simulation time, which can run slower or faster. The tortuous implementation passes the simulation time from a special simulation entity to a special service and, eventually, to a client-facing object. By contrast, the implementation hardships of the “Test runner service” section, which describes the issues of implementing the test runner tool, evoke nothing but grief and dejection due to their unnecessary complications.
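The essence of the time substitution can be illustrated with a minimal sketch: client code asks a clock object for the time instead of reading the wall clock, and the simulator is the only party that advances it. This is a Python illustration, not the MRDS implementation; `SimulationClock`, `wait_until` and the `step_simulation` callback are hypothetical names, and the entity-to-service-to-client chain mentioned above is collapsed into a single object.

```python
from typing import Callable


class SimulationClock:
    """Client-facing clock whose time is driven by the simulator, not the OS."""

    def __init__(self) -> None:
        self._now = 0.0

    def advance(self, dt: float) -> None:
        """Called by the simulation side after each physics step."""
        if dt < 0:
            raise ValueError("simulation time cannot go backwards")
        self._now += dt

    def now(self) -> float:
        """Current simulation time in seconds."""
        return self._now


def wait_until(clock: SimulationClock, predicate: Callable[[], bool],
               timeout: float, step_simulation: Callable[[], None]) -> bool:
    """Wait for `predicate`, measuring the timeout in simulation time."""
    deadline = clock.now() + timeout
    while clock.now() < deadline:
        if predicate():
            return True
        step_simulation()  # simulated time moves only when the simulator steps
    return predicate()


if __name__ == "__main__":
    clock = SimulationClock()
    step = lambda: clock.advance(0.5)  # each step covers 0.5 s of simulated time
    print(wait_until(clock, lambda: clock.now() >= 2.0, 5.0, step), clock.now())
```

Because the timeout is counted in simulated seconds, a test behaves the same whether the simulator runs slower or faster than real time.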

“The test suite” section of the last part, “Analyzing”, gives an overview of the resulting test suite for the system that served as the main testbed for the testing framework itself. The “Good things” and “Bad things” sections are dedicated to the positive and negative results of the work. The most significant positive result is the general applicability of automated acceptance testing to robotics: the realization that it brings genuine benefit in many ways, such as massive automation of testing activity, control of continuous change, organization and documentation of requirements, and precise statement of requirements. The probabilistic testing approach, on the other hand, was not exploited extensively enough to reach a definitive verdict, but it proved itself in several cases and was recognized as tentatively useful. I would lay responsibility for most of the framework’s revealed problems upon MRDS, for its vices are many: an unstable physics simulator, unwieldy yield operator syntax and a steep learning curve, to name a few. But it cannot be blamed for others, such as the extremely long execution time of the test suite (due to multiple test runs) and the lack of some necessary tooling.

Following the ideas and using the tools from the presented work, I have developed the control system for the mobile robot. Although this control system is very crude, it captures some key features of robotics software in general. Its test suite contains a considerable number of plain and probabilistic tests. During development, automated acceptance testing proved its worth in all of its roles and senses. All of this allows me to contend that automated acceptance testing is applicable and distinctly beneficial to robotics software.
